Quantum Mechanics and Objective Reality

by Frank Luger

The main features of quantum theory, such as the wave function, the uncertainty principle, wave-particle duality, indeterminacy, probabilistic behavior, exchange forces, spin, and quarks with their various flavors (charm among them), are so counterintuitive as to defy common sense. It is often argued that, since they are abstractions one way or another, they may be figments of overactive imaginations. Not quite, counters the theoretical physicist: although the road from mathematical modeling to scientific fact is a tough one, there is overwhelming experimental and other evidence in favor of quantum mechanics as objective reality.

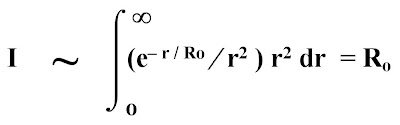

To see why one can say that the world at the tiny scales of microphysics is as quantum theory describes it, it is instructive to take the wave function as a representative example. Although a mathematical abstraction, the wave function corresponding to a physical system contains all the information that is obtainable about the system. For example, if a moving particle acted on by a force is represented by a wave function ψ, then measurement of a physical quantity, such as momentum, always yields an eigenvalue of the associated operator (here, the momentum operator). In general, the outcome of the measurement is not precisely predictable and is not the same for identically prepared systems; but each possible outcome, or eigenvalue, has a certain probability of occurring.

This probability is given by the squared modulus of the scalar product of the normalized wave function ψ, or state vector, and the eigenvector of the operator corresponding to that particular eigenvalue. Furthermore, not all operators representing physical quantities commute; that is, sometimes AB ≠ BA, where multiplication of the operators A and B corresponds to making two measurements in the order indicated. These unusual but unambiguous postulates, which associate probabilities with geometric properties of vectors in an abstract space, have great predictive and explanatory value and, at the same time, many implications that confound our intuition.
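As a minimal sketch of these two postulates, the following uses a spin-1/2 system. That choice is an assumption made purely for illustration: the Pauli matrices `SIGMA_X` and `SIGMA_Z` stand in for the generic operators A and B, and the state `psi` and the helper names are hypothetical.

```python
import math

# Illustrative spin-1/2 example (an assumption, not taken from the text): the
# Pauli matrices sigma_x and sigma_z stand in for the operators A and B.
SIGMA_X = [[0, 1], [1, 0]]
SIGMA_Z = [[1, 0], [0, -1]]


def inner(u, v):
    """Scalar product <u|v>, conjugating the first argument."""
    return sum(complex(a).conjugate() * b for a, b in zip(u, v))


def born_probability(eigenvector, psi):
    """Squared modulus of the scalar product of an eigenvector and the state."""
    return abs(inner(eigenvector, psi)) ** 2


def matmul(a, b):
    """Product of two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


# A normalized state, psi = (sqrt(3)/2, 1/2).
psi = [math.sqrt(3) / 2, 0.5]

# Eigenvectors of sigma_z for the eigenvalues +1 and -1.
z_up, z_down = [1, 0], [0, 1]
p_up = born_probability(z_up, psi)      # about 0.75
p_down = born_probability(z_down, psi)  # about 0.25

# The two orderings of the operator product differ, so the operators
# do not commute.
xz = matmul(SIGMA_X, SIGMA_Z)
zx = matmul(SIGMA_Z, SIGMA_X)
commute = xz == zx

print(p_up, p_down, p_up + p_down)
print(xz, zx, commute)
```

The probabilities of the two outcomes sum to one because the state is normalized, and the two matrix products differ by an overall sign.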

Because of the usefulness of the wave function in generating experimentally testable predictions, it appears that a mathematical abstraction here takes on a reality equivalent to that of concrete events, as envisioned by Pythagorean and Platonic philosophies. However, there is a direct connection between the abstraction and observable events, and there has not been much tendency in physics to place the wave function in some realm of ideal forms, Platonic or otherwise.

A similar state of affairs already existed in classical electrodynamics, and some physicists remarked that Maxwell’s laws were nothing more than Maxwell’s equations. Perhaps because radiation always had been regarded as immaterial with wave properties, this point of view was not quite as disturbing as it became when matter waves had to be considered. In both cases, however, there does appear to be a problem in explaining how mathematical symbolism can do so much.

Platonic implications can be avoided if we look more closely at the actual, concrete role of the wave function in the theory. If viewed as a conceptual tool, rather than something given, the idea of a wave function containing information about observable events is not so strange. The meaning of the wave function is defined by its role in the theory, which after all is a matter of theorists interacting with events. A clue to this purely conceptual, computational role is the fact that a wave function can be multiplied by an arbitrary phase factor without changing its physical significance in any way. Also, the fact that it is a complex-valued function discourages one from interpreting it as something with spatial and temporal wave properties.
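The phase-factor remark can be checked numerically. The sketch below is an illustration only: the two-component state, the phase angle 1.234, and the helper `overlap_probability` are all hypothetical choices. It multiplies a state by a phase factor of modulus one and compares the measurement probabilities in two different bases.

```python
import cmath


def overlap_probability(eigenvector, psi):
    """Born probability |<eigenvector|psi>|^2 for normalized vectors."""
    amplitude = sum(complex(e).conjugate() * c for e, c in zip(eigenvector, psi))
    return abs(amplitude) ** 2


psi = [0.6, 0.8j]                      # normalized: 0.36 + 0.64 = 1
phase = cmath.exp(1j * 1.234)          # arbitrary phase factor of modulus 1
psi_shifted = [phase * c for c in psi]

# Eigenvectors of two different measurements: the basis psi is written in,
# and a basis rotated by 45 degrees.
s = 2 ** -0.5
eigenvectors = [[1, 0], [0, 1], [s, s], [s, -s]]

before = [overlap_probability(e, psi) for e in eigenvectors]
after = [overlap_probability(e, psi_shifted) for e in eigenvectors]

for p, q in zip(before, after):
    print(round(p, 12), round(q, 12))
```

Every probability is unchanged because the phase factor drops out when the modulus of the scalar product is taken.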

As the search for causes has diminished in modern physics, the success of microphysics in explaining the properties of complex structures such as atoms, molecules, crystals, and metals has increased markedly at the same time. If causality is conceived, as it once was, in terms of collisions among particles with well-defined trajectories, then it has no meaning at the quantum level. However, a remarkable consistency in the evolution of identical structures with characteristic properties is apparent in nature. Quantum mechanics goes far toward explaining how these composite systems are built up from more elementary components. Although the once predominant mechanistic view of colliding particles is no longer tenable, its decline has been accompanied by success in the actual achievement of its original aims.

Terms such as causality and determinism still are used occasionally by physicists, but their connotations are quite different from what they were in earlier times. The formalism of quantum theory implies that determinism characterizes states, but not observables. The state of the system described by a wave function ψ evolves in time in a strictly deterministic manner, according to the Schrödinger equation, provided that a measurement is not made during that period of time. This usage of determinism actually is equivalent to the statement that the Schrödinger equation is a first-order differential equation with respect to time, so that the state at one instant fixes the state at every later instant.
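For reference, the equation in question can be written out. The symbols are the standard ones rather than notation taken from this article: H denotes the Hamiltonian operator, ħ the reduced Planck constant, and t₀ an arbitrary initial instant. The closed-form solution shown assumes that H does not depend on time.

$$
i\hbar\,\frac{\partial \psi(t)}{\partial t} = H\,\psi(t),
\qquad
\psi(t) = e^{-iH(t-t_0)/\hbar}\,\psi(t_0).
$$

Because only the first time derivative appears, specifying ψ at the single instant t₀ is enough to fix ψ(t) at all other times, which is the sense of determinism described above.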

In contrast, if at some instant a measurement of a physical quantity is made, the possible values that might be obtained are represented by a probability distribution. Furthermore, a measuring instrument introduces an uncontrollable disturbance into the system, and, afterwards, that system is in a different state that is not precisely predictable. This situation led Max Born (1882-1970) to make the famous statement that the motion of particles conforms to the laws of probability, but the probability itself is propagated in accordance with the law of causality. The initial astonishment produced by this unforeseen turn of events was shortly followed by an even greater astonishment when these unconventional ideas proved to be extremely workable in practice.

Consider more closely the role of causality and of probability in the theory. The relationship ψ₁ → ψ₂, where ψ₁ and ψ₂ are states at successive instants in time, is completely determined in the theory, provided no measurement takes place during the interval. Moreover, if a measurement is made at some instant, the relationships ψ₁ → f(x) and ψ₂ → g(x), where f(x) and g(x) are probability distributions of an observable, also are completely determined. The new and strange features of the theory are embodied in the facts that (a) these probability distributions, in general, have nonzero variance, and (b) if the relation ψ₁ → f(x) is in fact exhibited by making a measurement, then the relation ψ₁ → ψ₂ no longer holds.
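These relationships can be traced in a toy model. The sketch below makes several assumptions for illustration: a two-level system with Hamiltonian proportional to the Pauli matrix sigma_x, units in which ħ = 1, and an observable sigma_z with outcomes +1 and -1. The function names and the measurement outcome chosen are hypothetical.

```python
import math


def evolve(psi, t, omega=1.0):
    """Measurement-free evolution under the toy Hamiltonian H = omega * sigma_x
    (hbar = 1), using U(t) = cos(omega t) I - i sin(omega t) sigma_x."""
    c, s = math.cos(omega * t), math.sin(omega * t)
    a, b = psi
    return (c * a - 1j * s * b, -1j * s * a + c * b)


def distribution(psi):
    """Probabilities of the outcomes +1 and -1 when sigma_z is measured."""
    return {+1: abs(psi[0]) ** 2, -1: abs(psi[1]) ** 2}


def variance(dist):
    """Variance of a discrete distribution given as {value: probability}."""
    mean = sum(value * p for value, p in dist.items())
    return sum((value - mean) ** 2 * p for value, p in dist.items())


psi1 = (math.sqrt(3) / 2, 0.5)   # normalized state at the first instant
dt = 0.4                         # interval to the second instant

psi2 = evolve(psi1, dt)          # psi1 -> psi2, fully determined
f = distribution(psi1)           # psi1 -> f(x), fully determined
g = distribution(psi2)           # psi2 -> g(x), fully determined

# (a) The distributions generally have nonzero variance.
print(variance(f), variance(g))

# (b) Suppose a measurement at the first instant happened to return +1.
# The state is then the +1 eigenvector, and evolving it does not give psi2.
collapsed = (1.0, 0.0)
psi2_after_measurement = evolve(collapsed, dt)
print(distribution(psi2), distribution(psi2_after_measurement))
```

The final line prints two different distributions, showing that carrying out the measurement at the first instant breaks the link between ψ₁ and ψ₂.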

It is difficult to grasp intuitively that the probabilities referred to are those of measurements that might be obtained on an individual system using a perfectly reliable instrument, and that they seemingly come from nowhere. Expressed mathematically, the only appropriate probability space corresponding to the probability distribution of a quantum mechanical observable is provided by the real line, its measurable subsets, and the probability measure determined by the wave function; and that structure is not, as is usually the case, induced by an underlying probability space having physical significance. Despite intensive search over many decades, no such underlying probability space has ever been found, and it is now generally agreed that one does not exist. This search in fact resembled somewhat the frustrating attempts in the nineteenth century to find an ether, a hypothetical universal space-filling medium propagating radiation.

Nevertheless, when matters are expressed as above, it appears that quite a lot about the theory is deterministic. Furthermore, this viewpoint discourages the tendency to confuse indeterminacy with an inability of scientists to make effective contact with events. Probability distributions of measurements are objective, concrete things. Determinism fails when applied to the concept of an elementary corpuscle simultaneously having a definite position and a definite momentum, conditions never observed experimentally.

Quantum theory, as emphasized previously, was applied with excellent results to a broad range of phenomena; for example, the periodic table of the elements at last became understandable, and the foundations of all inorganic chemistry, and of much organic chemistry and solid state physics, were firmly established. Contrary to the expectations of some critics, the theory definitely has not encouraged a view of the world ruled by a capricious indeterminacy, but, on the contrary, has greatly enhanced the coherence and explanatory power of science.

Still, the above turn of events in the age-old problem of causality had not been anticipated. The fact that the implications of the theory conflicted in such a radical way with previous philosophical views was a departure from tradition that probably to this date has not been fully assimilated.

Eventually, one may hope, concepts such as causality, system, interaction, and interdependence will be extended and enriched by the findings of quantum physics. Perhaps we are already beginning to see this happen and to appreciate that the new viewpoint does not entail as much of a loss as we once believed. In both classical physics and quantum physics a list of well-defined dynamical variables is associated with each system, and in some respects the quantum mechanical description by state vectors is analogous to a phase-space representation in classical statistical mechanics. Formally, the dynamical variables play a different role in the two theories, but in both cases their specification exhausts the observable properties of the system. The probabilistic aspects of quantum theory, as stressed before, certainly do not imply an inability to find lawfulness and orderliness in nature.

Although quantum mechanical predictions of, for example, position are inherently probabilistic, in many instances a particle is sufficiently localized that the probability of its appearing outside a restricted range is essentially zero; that is, the dispersion of the distribution is small. It becomes meaningful, for example, to speak of shells and subshells in atomic structure. Overall, it appears that abandonment of the rather limited classical cause-and-effect scheme is a minimal loss compared to the far greater gains achieved by the theory as a whole.

Like many ideas in quantum theory, the celebrated Heisenberg uncertainty principle becomes less mysterious if examined in its concrete role in the theory. The uncertainty principle is not an insight which preceded the theory, but is built into its structure, that is, it can be derived from the abstract formalism. Heisenberg’s matrix mechanics and its success in accounting for experimental results came first; the uncertainty principle and its implications then were recognized.

Essentially, this principle means that the dispersions, or variances, of probability distributions of noncommuting observables are constrained by one another, or, alternatively, that a function and its Fourier transform cannot both be arbitrarily sharp. The physical significance of this result is that measurements of certain pairs of observed quantities, such as position and momentum, or time and energy, cannot simultaneously be made arbitrarily accurate. The principle has been confirmed many times over by an overwhelming mass of evidence. Accordingly, the principle is an objective property of events that must be confronted in future advances of our understanding of the physical world. Much the same is true about all the other main features of quantum theory.
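The Fourier-transform form of the statement lends itself to a numerical check. The sketch below assumes a Gaussian wave packet, a choice made here for illustration because it is the case in which the product of the two spreads reaches its minimum of 1/2. The function `spreads`, the grid sizes, and the grid ranges are hypothetical. It computes the spread of the packet in position and the spread of its Fourier transform in wavenumber by direct summation.

```python
import cmath
import math


def spreads(sigma, n=401):
    """Return (sigma_x, sigma_k) for the packet psi(x) = exp(-x^2 / (4 sigma^2)).

    sigma_x is the standard deviation of |psi(x)|^2 and sigma_k that of
    |phi(k)|^2, where phi is the Fourier transform of psi.
    """
    xs = [-10 * sigma + 20 * sigma * i / (n - 1) for i in range(n)]
    ks = [(-5 + 10 * j / (n - 1)) / sigma for j in range(n)]
    psi = [math.exp(-x * x / (4 * sigma * sigma)) for x in xs]
    # Fourier transform by direct summation on a uniform grid; constant
    # prefactors cancel when the distributions are normalized below.
    phi = [sum(p * cmath.exp(-1j * k * x) for p, x in zip(psi, xs)) for k in ks]

    def std(grid, amplitudes):
        weights = [abs(a) ** 2 for a in amplitudes]
        total = sum(weights)
        mean = sum(g * w for g, w in zip(grid, weights)) / total
        var = sum((g - mean) ** 2 * w for g, w in zip(grid, weights)) / total
        return math.sqrt(var)

    return std(xs, psi), std(ks, phi)


narrow_x, narrow_k = spreads(0.5)   # sharp in position
wide_x, wide_k = spreads(2.0)       # broad in position

print(narrow_x, narrow_k, narrow_x * narrow_k)
print(wide_x, wide_k, wide_x * wide_k)
```

Sharpening the packet in position broadens it in wavenumber, and the reverse, while the product of the two spreads stays at about 1/2 for this Gaussian case. Multiplying the wavenumber by ħ gives the momentum, which turns the product into the familiar bound of ħ/2.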

Although quantum mechanics and the blurred mode of existence that it reveals represent current frontiers in the direction of the infinitesimally small, it is generally acknowledged that this is not the final answer. Quantum reality is reality, to be sure, but it is still very much a virtual reality inasmuch as it refers to states of affairs relative to Man. As such, it is reasonable to expect that it has a source and a destination, being perhaps an integral albeit temporal phenomenon of an underlying ultimate reality. That is, quantum mechanics is objective reality; but it remains to be seen where it comes from and where it goes. However, that’s another story.